Autoencoders

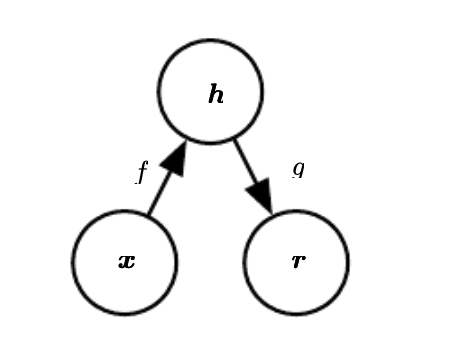

An autoencoder is a neural network that is trained to attempt to copy its input to its output.

Internally, it has a hidden layer \(h\) that describes a code used to represent the input.

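It consists of two parts:

- an encoder \(h = f(x)\), which maps the input \(x\) to the code \(h\);
- a decoder \(r = g(h)\), which maps the code back to a reconstruction \(r\) of the input.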

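The idea is to learn \(g(f(x)) \approx x\) without reconstructing the input perfectly. Because the model can only copy approximately, it is forced to prioritize which aspects of the input to keep, and so it learns the aspects of the data that are most important.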
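Training minimizes a loss \(L\) that penalizes the reconstruction \(g(f(x))\) for being dissimilar from \(x\). For real-valued inputs, one common choice (assumed here as an illustration) is the mean squared error over \(N\) training examples \(x^{(1)}, \dots, x^{(N)}\):

\[
\min_{f,\,g}\; L\big(x, g(f(x))\big), \qquad \text{e.g.} \quad L_{\text{MSE}} = \frac{1}{N} \sum_{i=1}^{N} \big\lVert x^{(i)} - g\big(f(x^{(i)})\big) \big\rVert_2^2 .
\]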

Autoencoders can be connected to latent variable models, with the code \(h\) playing the role of the latent variable.

Both the encoder and the decoder can be neural networks.
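The following is a minimal NumPy sketch of the encoder/decoder pair. For illustration only, it assumes a single tanh layer as the encoder, a single linear layer as the decoder, random untrained weights, and a squared-error reconstruction loss. The sizes and the names `W_e`, `b_e`, `W_d`, `b_d` are hypothetical.

```python
import numpy as np

# Hypothetical sizes for illustration: 8-dimensional inputs, 3-dimensional code.
rng = np.random.default_rng(0)
n_in, n_code = 8, 3

# Encoder parameters (W_e, b_e) and decoder parameters (W_d, b_d), randomly initialized.
W_e = rng.normal(scale=0.1, size=(n_code, n_in))
b_e = np.zeros(n_code)
W_d = rng.normal(scale=0.1, size=(n_in, n_code))
b_d = np.zeros(n_in)

def f(x):
    """Encoder: h = f(x), one affine layer followed by tanh."""
    return np.tanh(x @ W_e.T + b_e)

def g(h):
    """Decoder: r = g(h), one affine layer (linear output for real-valued x)."""
    return h @ W_d.T + b_d

def reconstruction_loss(x):
    """Squared error between x and r = g(f(x)), averaged over the batch."""
    r = g(f(x))
    return np.mean(np.sum((x - r) ** 2, axis=1))

x = rng.normal(size=(5, n_in))   # a batch of 5 inputs
h = f(x)                         # codes
r = g(h)                         # reconstructions
print(h.shape, r.shape)          # (5, 3) (5, 8)
print(reconstruction_loss(x))
```

Training would adjust the four parameter arrays to reduce `reconstruction_loss`. In practice, a framework with automatic differentiation usually computes the gradients.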

Under-complete autoencoders

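We do not want the encoder-decoder pair to simply learn the identity function, i.e. \(g(f(x)) = x\) for every input \(x\). In an undercomplete autoencoder, this is prevented by constraining the code \(h\) to have a lower dimension than the input \(x\). The network must then capture the most salient features of the training data.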
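As a sketch of the undercomplete case, the code below trains a linear autoencoder with a 2-dimensional code using plain gradient descent on the mean squared error. The training data is toy 5-dimensional data that, by construction (an assumption of this example), lies on a 2-dimensional subspace. With a linear decoder and squared error, an undercomplete autoencoder learns to span the same subspace as PCA. The final error is therefore compared with the best rank-2 reconstruction from a truncated SVD.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data (an assumption for illustration): 200 points in R^5 that lie
# on a 2-dimensional subspace spanned by the orthonormal columns of Q.
n, d, k = 200, 5, 2                    # k < d makes the autoencoder undercomplete
Q, _ = np.linalg.qr(rng.normal(size=(d, k)))
X = rng.normal(size=(n, k)) @ Q.T

# Linear encoder h = W_e x (k x d) and linear decoder r = W_d h (d x k).
W_e = rng.normal(scale=0.1, size=(k, d))
W_d = rng.normal(scale=0.1, size=(d, k))
lr = 0.05

def loss(W_e, W_d):
    R = X - X @ W_e.T @ W_d.T          # residuals x - g(f(x)) for every row
    return np.mean(np.sum(R ** 2, axis=1))

initial = loss(W_e, W_d)
for step in range(2000):
    H = X @ W_e.T                      # codes, shape (n, k)
    R = X - H @ W_d.T                  # residuals, shape (n, d)
    grad_W_d = -2.0 / n * R.T @ H      # dL/dW_d, shape (d, k)
    grad_H = -2.0 / n * R @ W_d        # dL/dH, shape (n, k)
    grad_W_e = grad_H.T @ X            # dL/dW_e, shape (k, d)
    W_d -= lr * grad_W_d
    W_e -= lr * grad_W_e
final = loss(W_e, W_d)

# Reference: best rank-k reconstruction via truncated SVD (PCA without centering).
_, _, Vt = np.linalg.svd(X, full_matrices=False)
X_pca = X @ Vt[:k].T @ Vt[:k]
pca_err = np.mean(np.sum((X - X_pca) ** 2, axis=1))

print(f"initial {initial:.4f}, final {final:.2e}, PCA {pca_err:.2e}")
```

With `k = 1`, the error could not reach zero, because a 1-dimensional code cannot describe the 2-dimensional variation in this data.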

Regularized autoencoder

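Here we allow the code \(h\) to be large, even larger than the input, but add a penalty term to the loss to avoid overfitting. The penalty keeps the model from simply learning to copy its input and encourages other properties, such as a sparse code.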
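One common choice of penalty is an L1 penalty on the code, which gives a sparse autoencoder with loss \(L(x, g(f(x))) + \lambda \lVert h \rVert_1\). It is used here only as an example, since the text above does not fix a particular penalty. The sketch below assumes an overcomplete ReLU encoder with 16 code units for 4-dimensional inputs, random weights, and a hypothetical weight `lam` for the penalty.

```python
import numpy as np

rng = np.random.default_rng(1)

# Overcomplete sizes (an assumption for illustration): code larger than input.
n_in, n_code = 4, 16
W_e = rng.normal(scale=0.5, size=(n_code, n_in))
W_d = rng.normal(scale=0.5, size=(n_in, n_code))

def encode(x):
    # ReLU encoder, so many code units can be exactly zero
    return np.maximum(0.0, x @ W_e.T)

def decode(h):
    return h @ W_d.T

def sparse_ae_loss(x, lam):
    """Reconstruction error plus lam * ||h||_1 (an L1 sparsity penalty on the code)."""
    h = encode(x)
    r = decode(h)
    recon = np.mean(np.sum((x - r) ** 2, axis=1))
    penalty = lam * np.mean(np.sum(np.abs(h), axis=1))
    return recon + penalty, recon, penalty

x = rng.normal(size=(10, n_in))
for lam in (0.0, 0.1):
    total, recon, penalty = sparse_ae_loss(x, lam)
    print(f"lam={lam}: total={total:.3f} recon={recon:.3f} penalty={penalty:.3f}")
```

Larger `lam` trades reconstruction quality for codes with fewer active units. With `lam = 0`, the loss reduces to plain reconstruction error.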

Stochastic Autoencoder

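Both the encoder and the decoder are stochastic models. The encoder defines a distribution \(p_{\text{encoder}}(h \mid x)\) over codes, and the decoder defines a distribution \(p_{\text{decoder}}(x \mid h)\) over inputs.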
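A minimal sketch of a stochastic encoder and decoder follows. It assumes Gaussian distributions with linear means and fixed, hand-picked standard deviations `sigma_h` and `sigma_x`. These choices, and the names `W_mu` and `W_d`, are hypothetical. The encoder samples a code, and the decoder scores the input by its negative log-likelihood \(-\log p_{\text{decoder}}(x \mid h)\).

```python
import numpy as np

rng = np.random.default_rng(2)
n_in, n_code = 6, 2

# Hypothetical parameters; in practice these would be learned.
W_mu = rng.normal(scale=0.3, size=(n_code, n_in))
W_d = rng.normal(scale=0.3, size=(n_in, n_code))
sigma_h = 0.1   # assumed fixed std. dev. of the encoder distribution
sigma_x = 1.0   # assumed fixed std. dev. of the decoder distribution

def sample_encoder(x):
    """Draw h ~ p_encoder(h | x) = N(W_mu x, sigma_h^2 I)."""
    mu = x @ W_mu.T
    return mu + sigma_h * rng.normal(size=mu.shape)

def decoder_nll(x, h):
    """-log p_decoder(x | h) for a Gaussian N(W_d h, sigma_x^2 I), one value per example."""
    mean = h @ W_d.T
    d = x.shape[1]
    sq = np.sum((x - mean) ** 2, axis=1)
    return 0.5 * sq / sigma_x**2 + 0.5 * d * np.log(2 * np.pi * sigma_x**2)

x = rng.normal(size=(3, n_in))
h = sample_encoder(x)          # a different h on each call: the encoder is stochastic
nll = decoder_nll(x, h)        # training would minimize the mean of this
print(nll)
```

With a fixed `sigma_x`, minimizing this Gaussian negative log-likelihood is equivalent to minimizing squared reconstruction error. The deterministic autoencoder is therefore recovered as a special case.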

Denoising Autoencoder (DAE)

Here the encoder receives a corrupted copy \(\tilde{x}\) of the input, and the network is trained to remove this corruption, i.e. to reconstruct the clean input \(x\) from \(\tilde{x}\).
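The sketch below shows how the denoising loss differs from the plain reconstruction loss: the network sees \(\tilde{x}\), but the target is the clean \(x\). It assumes masking noise as the corruption process, where each entry is zeroed with probability `drop_prob`; additive Gaussian noise is another common choice. The encoder and decoder are hypothetical stand-ins with tied weights and no training.

```python
import numpy as np

rng = np.random.default_rng(3)

def corrupt(x, drop_prob=0.3):
    """Masking noise (one common corruption process):
    each entry is set to zero independently with probability drop_prob."""
    mask = rng.random(x.shape) >= drop_prob
    return x * mask

# Hypothetical encoder/decoder with tied weights, standing in for trained networks.
W = rng.normal(scale=0.3, size=(3, 8))

def encode(x):
    return np.tanh(x @ W.T)

def decode(h):
    return h @ W

def dae_loss(x, drop_prob=0.3):
    """Denoising loss: reconstruct the CLEAN x from the corrupted x_tilde."""
    x_tilde = corrupt(x, drop_prob)
    r = decode(encode(x_tilde))
    return np.mean(np.sum((x - r) ** 2, axis=1))  # target is x, not x_tilde

x = rng.normal(size=(4, 8))
print(dae_loss(x))
```

A fresh corruption is drawn on every call, so during training the network sees many corrupted versions of each example.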

Contractive autoencoders

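We regularize the code \(h = f(x)\) by encouraging the derivatives of \(f\) with respect to \(x\) to be as small as possible, using the penalty \(\Omega(h) = \lambda \left\lVert \frac{\partial f(x)}{\partial x} \right\rVert_F^2\), the squared Frobenius norm of the encoder's Jacobian. The code then changes little when the input is perturbed slightly.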
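For a single sigmoid layer \(h = \sigma(Wx + b)\), the Jacobian has the closed form \(\partial h_j / \partial x_k = h_j (1 - h_j) W_{jk}\). The penalty therefore becomes \(\lambda \sum_j \big(h_j(1-h_j)\big)^2 \sum_k W_{jk}^2\). The sketch below assumes such a sigmoid encoder with random weights and checks the closed form against a finite-difference Jacobian. The names `W`, `b`, and `lam` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(4)
n_in, n_code = 5, 3
W = rng.normal(size=(n_code, n_in))   # hypothetical encoder weights
b = rng.normal(size=n_code)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def f(x):
    """Encoder h = sigmoid(W x + b) for a single input vector x."""
    return sigmoid(W @ x + b)

def contractive_penalty(x, lam=0.1):
    """lam * ||df/dx||_F^2 using the closed-form Jacobian of a sigmoid layer:
    J[j, k] = h_j (1 - h_j) W[j, k]."""
    h = f(x)
    s = (h * (1.0 - h)) ** 2          # squared sigmoid derivatives, one per code unit
    return lam * np.sum(s * np.sum(W ** 2, axis=1))

def jacobian_fd(x, eps=1e-6):
    """Central finite-difference Jacobian of f, used only to check the formula."""
    J = np.zeros((n_code, n_in))
    for k in range(n_in):
        e = np.zeros(n_in)
        e[k] = eps
        J[:, k] = (f(x + e) - f(x - e)) / (2 * eps)
    return J

x = rng.normal(size=n_in)
lam = 0.1
print(contractive_penalty(x, lam), lam * np.sum(jacobian_fd(x) ** 2))
```

The two printed values should agree closely. During training, this penalty would be added to the reconstruction loss.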

Applications

- Dimensionality reduction
- Information retrieval
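Both applications rely on the low-dimensional code. Items can be indexed by their codes, and a query is answered by searching for nearby codes instead of comparing full inputs. A minimal sketch follows, assuming a hypothetical encoder whose random weights stand in for a trained one, and Euclidean distance in code space. A related variant binarizes the codes and searches by Hamming distance (semantic hashing).

```python
import numpy as np

rng = np.random.default_rng(5)

# Hypothetical trained encoder; random weights stand in for learned ones here.
W = rng.normal(size=(4, 20))

def encode(x):
    return np.tanh(x @ W.T)            # 20-dim inputs -> 4-dim codes

database = rng.normal(size=(100, 20))  # items to search over
codes = encode(database)               # index built once, in the low-dimensional code space

def retrieve(query, top_k=3):
    """Return indices of the top_k items whose codes are closest to the query's code."""
    q = encode(query[None, :])[0]
    dists = np.sum((codes - q) ** 2, axis=1)
    return np.argsort(dists)[:top_k]

print(retrieve(database[42]))          # item 42 itself is returned first
```

Searching over 4-dimensional codes rather than 20-dimensional inputs reduces both storage and distance computations, which is where the dimensionality reduction pays off.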