Linear discriminant analysis¶

It is Gaussian model for classification that is similar to Quadratic discriminant analysis where we assume Gaussian distribution for each class, but instead of having specific covariacne matrix for each class \(\Sigma_c\) we defined an shared covariance matrix \(\Sigma\).

The quadratic form:

is class independent, and it will cancel out the denominator if we define:

Hence we get:

Where:

\(\eta = [B^T_1x + \gamma_1, \cdots, B^T_C x + \gamma_c]\)

\(\mathcal{S}\) is the softmax function

For detailed derivation from QDA to LDA

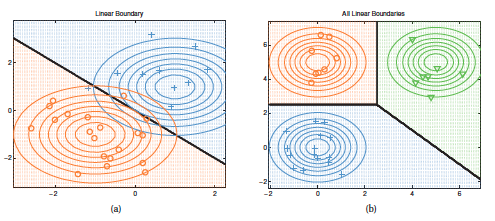

In the context of LDA, if we would take the log of the softmax function we would get a linear function, thus this makes the decision boundary linear.

MLE¶

If we maximize the log likelihood we get:

Regularization¶

Mle tends to be ill conditioned (covariance matrix is singular) in high dimensional settings, thus we have to introduce some mechanism to avoid overfitting.

Regularization¶

We perform MAP estimation on \(\Sigma\) using a inverse Wishart Prior of the from \(IW(diag (\hat{\Sigma}_{MLE}), v_0)\) hence we have:

Where:

\(\lambda\) control the amounth of regularizaton.

\(X\) is the design matrix

It is impossible to compute \(\hat{\Sigma}_{MLE}\) if \(D > N\), (Wide short matrix). But we can use SVD of X.

\(X = UDV^T\)

Diagonal LDA¶

If we use regularized LDA but we set \(\lambda = 1\) then we get a special variant called Diagonal LDA